Google’s ‘Neural Machine Translation’ for Indian languages: Here’s what it means

Google announced Neural Machine Translations for Indian languages, and here's what it will mean for users

Written by Shruti Dhapola | New Delhi | Updated: April 25, 2017 6:53 pm

Melvin Johnson, who is an engineer and researcher at Google’s Translate.

Melvin Johnson, who is an engineer and researcher at Google’s Translate.

Google just announced a big step on how translations will now work for Indian languages. Its Neural Machine Translation (NMT) is being rolled out for nine languages in India; Hindi, Bengali, Marathi, Tamil, Telugu, Gujarati, Punjabi, Malayalam and Kannada. This new system of translations will also power how the auto-translate works on Google Chrome and reviews feature on Google Maps.

When it comes to machine learning and artificial intelligence, Google is recognised as the undisputed leader, and the languages bit is part of this larger effort. So what exactly does NMT mean for Indian languages on the web, and why does this new system of translation matter? We explain below.

What exactly is Neural Machine Translation?

To put it simply, Google’s Neural Machine Translation rely on deep learning neural networks to carry out translations. It is a multilingual model, where the system is taught to translate between more than one pair of language.

Google started the project in 2015 using its own TensorFlow machine learning library to see how it could improve translations done by computers. TensorFlow is Google’s open source library for machine learning.

As Google’s Research Engineer Melvin Johnson explained to the media, a neural network is modelled on the human brain. Just like in the human brain responds to external stimuli, these deep learning neural networks are taught to respond to certain inputs.

Google announced Neural Machine translations for nine Indian languages.

Google announced Neural Machine translations for nine Indian languages.

In case of Neural Machine Translation (NMT) networks, these systems are taught the languages and are an ‘end-to-end system.’ The system is fed sentences from languages, which are to be translated. For instance, for a Hindi to English translation, the system is taught the same sentences in Hindi and its counterpart in English in order to understand the translation.

The system is similar to how Google is teaching computers to recognise images. In this case, the network is fed millions and millions of images of a particular object, like say a cat, until it can recognise on its own what identifies as a cat.

So how is the new NMT-based system better than what Google was doing earlier?

Google says the newer system of translation is much better qualitatively, and faster. The older system of translation for computers required these to be done phrase by phrase, and given that Google supports 103 languages, it made the process slower and tedious.

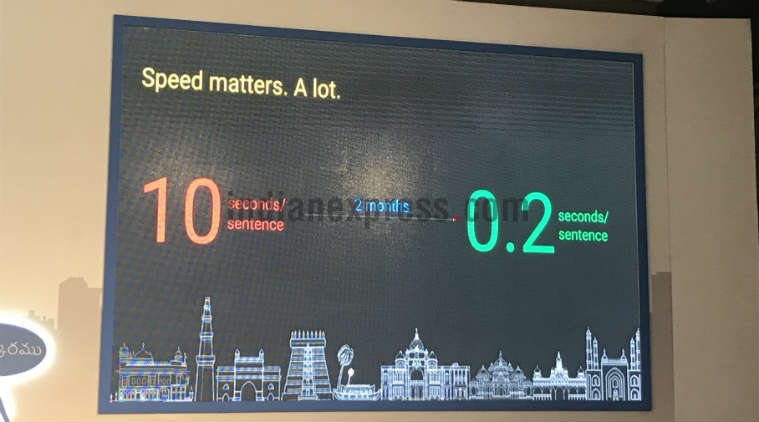

However, the new multilingual NMT system is much faster given the same model can be taught multiple languages, and allows Google to scale up much faster. It also learns translations based on sentence to sentence, rather than just phrase to phrase. Google says it is much more accurate, and closer to human-based translations than the previous system and improves translations from 10 seconds per sentence to 0.2 seconds per sentences.

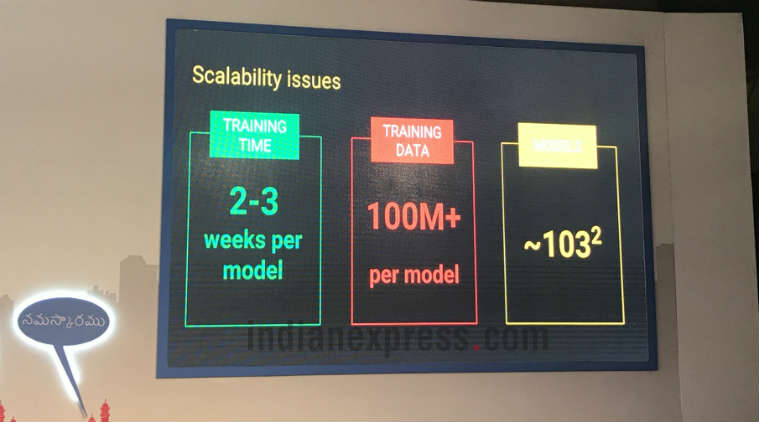

Some of the scaling challenges for Google’s NMT based translations.

Some of the scaling challenges for Google’s NMT based translations.

The new TPUs (TensorFlow Processing Units) that Google is using for these neural translations have also improved speed considerably, says the company, as these are specifically designed to support such operations. The idea with NMT is to bridge the gap between human and computer translations.

So is the system perfect? Or does it have some challenges still?

One has to remember than machine learning, be it around translation or image recognition is still in its infancy stages. As Johnson explains the NMT system for translation does face some challenges, still especially when it comes to India, which has so many regional languages.

Remember the system needs to be fed sentences in order to understand and recognise the language. It needs a parallel sentence in both English and Hindi, if that’s the pair that has to be translated by the machine.

The new system allows for much faster translation of sentences.

The new system allows for much faster translation of sentences.

As Johnson pointed out at times finding parallel content on the web is a challenge (this being content in a regional language). He calls it a “small cherry on top,” given English content dominates the web. This makes the task of training machines to do the translation much harder. Google will need more data, more content in regional languages in order to improve how this system fares.

Then there’s the issue of nuance and tone in translations, which humans can interpret and understand, but teaching that to a machine will be much harder.

What will it mean in the future for users?

The idea with the model isn’t just translating two pairs of language, like say Hindi to English. Eventually it could mean that Google Translate is able to translate Hindi to Tamil directly, without actually being taught this specifically.

In fact, in November last year, Google had revealed that its system was able to do ‘zero-shot translation’ or rather translate pairs of language where it wasn’t specifically taught to do this. Google had revealed the system figured out translations between Korean and Japanese. In this field of machine learning, this means a significant leap, because the system has figured out on its own how to translate. That could make all the difference in the future.

For all the latest Tech News, download Indian Express App now

First Published on: April 25, 2017 6:35 pm

© IE Online Media Services Pvt Ltd